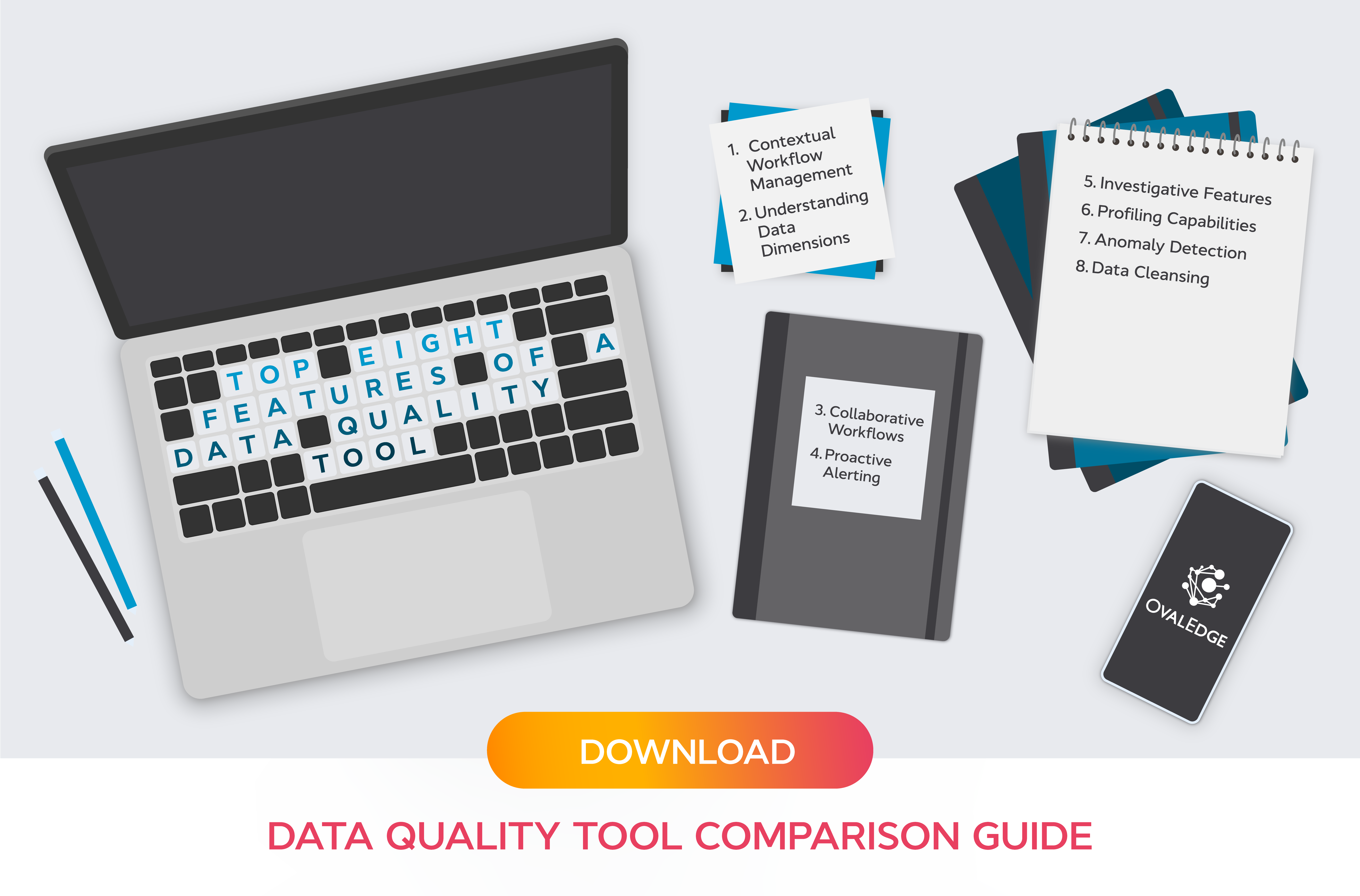

Top 8 Features of a Data Quality Tool

High-quality data is a cornerstone of data governance, but many companies still fail to implement ways to ensure it. The best way to boost data quality is with a dedicated tool. In this blog, we’ll run through the key features of a data quality tool so you can find the best fit for you.

If your data isn't high quality, it's worthless. Bad data quality means bad analytics, leading to bad business decisions that harm your organization. That's why ensuring your data remains of the highest quality is essential. We can divide data quality tools into two categories:

- Source level tools: These tools ensure that data is well understood and of high quality at the source, and that whenever it is moved or transformed, the transformations are visible, well understood, and don't pose operational challenges.

- Downstream tools: These tools are needed when companies cannot enforce data quality at the source or when quality degrades during transformation. They typically take the form of data cleansing and master data management (MDM) tools.

Related Post: Best Practices for Improving Data Quality

How Can a Data Quality Tool Support Your Data Strategy?

A good data strategy shouldn't assume that data will need to be manipulated after the fact. The best outcome is to ensure data quality at the source, but this isn't always possible and can sometimes be overly cumbersome. That's why you need a tool that provides both source level and downstream capabilities.

Ensuring data quality involves navigating a complex ecosystem, where data is moved from one place to another and between numerous users and departments. A data quality tool will help support various aspects of data quality in an organization, but to succeed, it must lead to three core outcomes:

- It must support collaboration between business and IT (Data Operations) departments.

- It must support the Data Operations team in maintaining the data pipeline and ecosystem.

- It must support data manipulation to achieve successful business outcomes.

In this post, we'll walk you through the most critical features of a data quality tool that can tackle both source level and downstream issues, and show how these features support the three core outcomes highlighted above.

Related Post: Data Governance and Data Quality: Working Together

Top 8 Features of a Full Spectrum Data Quality Tool

1. Contextual Workflow Management

When users report a data quality issue with a data asset, this report should be automatically directed to the correct stakeholders responsible for maintaining that specific asset. This process must be aligned with the context of the data, the issue at hand, and the correct person or team to tackle it.
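
As a rough illustration of context-aware routing, the sketch below sends an issue report to whichever team owns the affected asset. The ownership registry, team names, and `IssueReport` fields are hypothetical and only serve the example; a real tool would draw this context from its own catalog.

```python
from dataclasses import dataclass

# Hypothetical ownership registry: asset name -> responsible team.
ASSET_OWNERS = {
    "sales.orders": "sales-data-stewards",
    "crm.customers": "crm-data-stewards",
}
# Fallback when nobody is registered as the owner of an asset.
DEFAULT_OWNER = "data-operations"


@dataclass
class IssueReport:
    asset: str
    description: str
    reporter: str


def route_issue(report: IssueReport) -> str:
    """Return the team that should receive the report, based on the asset it concerns."""
    return ASSET_OWNERS.get(report.asset, DEFAULT_OWNER)


report = IssueReport("crm.customers", "Duplicate customer records", "analyst@example.com")
print(route_issue(report))  # crm-data-stewards
```

Reports about assets with no registered owner fall back to a default team, so nothing is silently dropped.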

2. Understanding Data Dimensions

It's not easy to gauge the quality of data. There are numerous data quality dimensions to consider, including accuracy, completeness, consistency, validity, timeliness, uniqueness, and integrity. A good data quality tool will help business users understand the various dimensions of data quality so they can make better judgments on how and when to escalate issues.
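
To make a few of these dimensions concrete, here is a minimal sketch that scores completeness, uniqueness, and validity for a handful of made-up records. The sample data, the simple email pattern, and the function names are assumptions for illustration, not how any particular tool measures them.

```python
import re

# Illustrative records; None marks a missing value.
records = [
    {"id": 1, "email": "ana@example.com"},
    {"id": 2, "email": None},
    {"id": 2, "email": "bob@example"},
    {"id": 4, "email": "cy@example.com"},
]

# Deliberately simple format check: something@something.something
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def completeness(rows, field):
    """Share of rows where the field is populated."""
    return sum(r[field] is not None for r in rows) / len(rows)


def uniqueness(rows, field):
    """Share of distinct values among all rows."""
    return len({r[field] for r in rows}) / len(rows)


def validity(rows, field, pattern):
    """Share of populated values that match the expected format."""
    values = [r[field] for r in rows if r[field] is not None]
    if not values:
        return 1.0  # nothing populated, so nothing is invalid
    return sum(bool(pattern.match(v)) for v in values) / len(values)


print(completeness(records, "email"))  # 0.75
print(uniqueness(records, "id"))       # 0.75
print(round(validity(records, "email", EMAIL_PATTERN), 2))  # 0.67
```

Scores like these give business users a shared vocabulary: a drop in completeness and a drop in validity are different problems and may need to be escalated to different people.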

3. Collaborative Workflows

A data quality tool must enable collaboration between the technical and non-technical stakeholders within the data pipeline and create a workflow between the various business entities to resolve specific issues. This collaboration between business users and Data Operations teams should work in both directions.

When either side finds an issue, they can work together to fix it. As such, the workflow engine that enables this collaboration spans departments, roles, and responsibilities.

4. Proactive Alerting

For Data Operations teams, proactive alerting is essential: they need to be notified as soon as a problem arises.

Proactive alerting minimizes the impact of a data quality issue because problems can be dealt with quickly, before they spread further into a company's data ecosystem.
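
One simple way to picture proactive alerting is a threshold check that runs after each data load. The sketch below is an assumption-laden example: the metric names, minimum values, and message format are all hypothetical.

```python
def check_thresholds(metrics, thresholds):
    """Return an alert message for every metric that is missing or below its minimum."""
    alerts = []
    for name, minimum in thresholds.items():
        value = metrics.get(name)
        if value is None or value < minimum:
            alerts.append(f"ALERT: {name}={value} (minimum {minimum})")
    return alerts


# Hypothetical quality scores from the latest load and the minimums agreed for them.
latest_metrics = {"completeness": 0.92, "uniqueness": 0.999}
minimums = {"completeness": 0.95, "uniqueness": 0.99}

for alert in check_thresholds(latest_metrics, minimums):
    print(alert)  # ALERT: completeness=0.92 (minimum 0.95)
```

In practice the messages would go to a notification channel rather than standard output, but the principle is the same: the check runs automatically, so the team hears about the problem before downstream users do.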

5. Investigative Features

Data Operations teams must be able to investigate alerts and reports by examining data pipelines, lineage, and related metadata.
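
Lineage-based investigation can be thought of as walking a dependency graph backwards from the asset where the problem was noticed. The sketch below assumes a hypothetical lineage graph stored as a plain dictionary; the asset names are invented for the example.

```python
from collections import deque

# Hypothetical lineage graph: each asset maps to the assets it is built from.
UPSTREAM = {
    "dashboard.revenue": ["mart.sales"],
    "mart.sales": ["staging.orders", "staging.customers"],
    "staging.orders": ["source.erp_orders"],
    "staging.customers": ["source.crm_customers"],
}


def upstream_assets(asset):
    """Return every asset that feeds the given one, nearest first (breadth-first)."""
    seen, order, queue = set(), [], deque([asset])
    while queue:
        current = queue.popleft()
        for parent in UPSTREAM.get(current, []):
            if parent not in seen:
                seen.add(parent)
                order.append(parent)
                queue.append(parent)
    return order


print(upstream_assets("dashboard.revenue"))
# ['mart.sales', 'staging.orders', 'staging.customers',
#  'source.erp_orders', 'source.crm_customers']
```

Listing the nearest assets first gives an investigator a natural order in which to check where the bad data entered the pipeline.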

6. Profiling Capabilities

Data profiling features enable users to ingest and understand the data in their ecosystem quickly and at scale.
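
At its simplest, profiling means summarizing each column so that users can see its shape at a glance. The following is a minimal sketch using only the Python standard library; the sample column and the chosen statistics are assumptions for illustration.

```python
from collections import Counter


def profile_column(values):
    """Summarize one column: row count, nulls, distinct values, and the most common value."""
    non_null = [v for v in values if v is not None]
    counts = Counter(non_null)
    return {
        "rows": len(values),
        "nulls": len(values) - len(non_null),
        "distinct": len(counts),
        "most_common": counts.most_common(1)[0][0] if counts else None,
    }


# Illustrative country-code column with a missing value and inconsistent casing.
country = ["US", "DE", None, "US", "FR", "us"]
print(profile_column(country))
# {'rows': 6, 'nulls': 1, 'distinct': 4, 'most_common': 'US'}
```

Even this small summary surfaces questions worth asking, such as why "US" and "us" appear as separate values.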

7. Anomaly Detection

With anomaly detection, technical teams can use AI/ML-enabled features to identify data anomalies proactively. Available out of the box, these features automatically search the data for discrepancies.
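
Commercial tools may rely on machine learning models for this, but the underlying idea can be shown with a much simpler statistical baseline. The sketch below is not an AI/ML method: it flags a value that sits far from the historical mean using a z-score. The row counts and the threshold of three standard deviations are assumptions.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, threshold=3.0):
    """Flag `latest` if it lies more than `threshold` standard deviations from the historical mean.

    `history` needs at least two values so a standard deviation can be computed.
    """
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly flat history: any change at all is unusual.
        return latest != mu
    return abs(latest - mu) / sigma > threshold


# Hypothetical daily row counts for a table over the past week.
daily_row_counts = [1000, 1020, 990, 1010, 1005, 995, 1015]

print(is_anomalous(daily_row_counts, 400))   # True
print(is_anomalous(daily_row_counts, 1012))  # False
```

A sudden drop to 400 rows is flagged, while normal day-to-day variation is not.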

8. Data Cleansing

From a business perspective, it's important that a data quality tool enables organizations to correct issues with the data once they have been surfaced. Data cleansing, often delivered as part of Master Data Management (MDM), gives users the means to stay on top of data as it's ingested and fix errors such as duplicate records.

The best tools offer AI-based data cleansing features that identify errors and correct them.
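
Duplicate removal is a useful way to see what cleansing involves. The sketch below is a simple rule-based example, not an AI-based one: it normalizes name and email before comparing records and keeps the first occurrence. The field names and sample customers are hypothetical.

```python
def normalize(record):
    """Build a comparison key from lightly cleaned name and email fields."""
    return (record["name"].strip().lower(), record["email"].strip().lower())


def deduplicate(records):
    """Keep the first record seen for each normalized key."""
    seen = set()
    unique = []
    for record in records:
        key = normalize(record)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


# Illustrative customer records; the second is a differently formatted copy of the first.
customers = [
    {"name": "Ana Ruiz", "email": "ana@example.com"},
    {"name": " ana ruiz ", "email": "ANA@example.com"},
    {"name": "Bob Lee", "email": "bob@example.com"},
]

print(len(deduplicate(customers)))  # 2
```

Exact matching after normalization only catches the easy cases; near-duplicates such as misspelled names are where fuzzy or model-based matching becomes worthwhile.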

Related Post: How to Manage Data Quality

The OvalEdge Solution

OvalEdge is a comprehensive data governance platform. It includes a set of dedicated data quality improvement tools that enable you to measure, assess, and evaluate the quality of your data and take proactive steps to fix data quality issues on an ongoing basis. OvalEdge provides all the capabilities you need, both at source level and downstream, to ensure high-quality data.

Utilize this free self-service tool to identify root causes of poor data quality and learn what needs fixing. Download now.