How to Manage Data Quality via Frameworks

Data quality management is an essential element of data governance. However, to undertake it effectively, you need a dedicated tool. In this article, we explain what data quality management is and how to implement your data quality improvement strategy.

Imagine you're a pilot whose navigation system is fed inaccurate data. You may fly in the wrong direction or, worse, crash. Similarly, poor data quality can lead to incorrect conclusions and poor decision-making, negatively impacting a company. Research by IBM found that in the US alone, $3.1 trillion is lost annually due to poor data quality.

Data scientists spend most of their time cleaning data sets whose quality was neglected when the data was first captured. Not only is this a waste of precious time, but it also creates another problem. When data is cleaned later on, many assumptions are made that may distort the outcome, yet data scientists have no choice but to make them. Sometimes there is no option but to fix data quality issues downstream, but the best practice is to address data quality at the source.

Data Quality Vs. Data Cleansing

Although the two are often confused, data quality and data cleansing are distinct processes with different objectives.

Data cleansing is the process of identifying and correcting errors and inconsistencies in data, while data quality is the overall fitness of data for its intended use. In this blog, we'll talk about how to improve overall data quality so that data cleansing is not required in the first place.

When you set out to assess the quality of the data in your organization, there are several key dimensions that you must consider. They are accuracy, completeness, consistency, validity, timeliness, uniqueness, and integrity. These measurements are obtained from data profiling and validation, among other measurement methods. Consistently measuring data quality helps keep track of the program’s progress. These dimensions and their measurement are discussed in detail in the article below.
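As a rough illustration of how two of these dimensions might be measured during profiling, here is a minimal Python sketch. The function names, the `customers` sample, and the choice to treat `None` and empty strings as missing are all assumptions made for this example, not a standard definition.

```python
from typing import Any

# Values treated as "missing" in this sketch (an assumption).
EMPTY = (None, "")


def completeness(rows: list[dict[str, Any]], field: str) -> float:
    """Share of rows where `field` is present and non-empty."""
    if not rows:
        return 1.0
    filled = sum(1 for row in rows if row.get(field) not in EMPTY)
    return filled / len(rows)


def uniqueness(rows: list[dict[str, Any]], field: str) -> float:
    """Share of non-empty values of `field` that are distinct."""
    values = [row[field] for row in rows if row.get(field) not in EMPTY]
    if not values:
        return 1.0
    return len(set(values)) / len(values)


# Hypothetical sample data.
customers = [
    {"id": 1, "email": "ana@example.com"},
    {"id": 2, "email": ""},
    {"id": 3, "email": "ana@example.com"},
    {"id": 4, "email": "li@example.com"},
]

email_completeness = completeness(customers, "email")  # 3 of 4 rows filled
email_uniqueness = uniqueness(customers, "email")      # 2 distinct of 3 values
```

Tracking scores like these over time is one simple way to see whether a data quality program is making progress.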

Read more: What is Data Quality? Dimensions & Their Measurement

In this blog, we’ll discuss various methodologies, frameworks, and tools that exist to improve data quality.

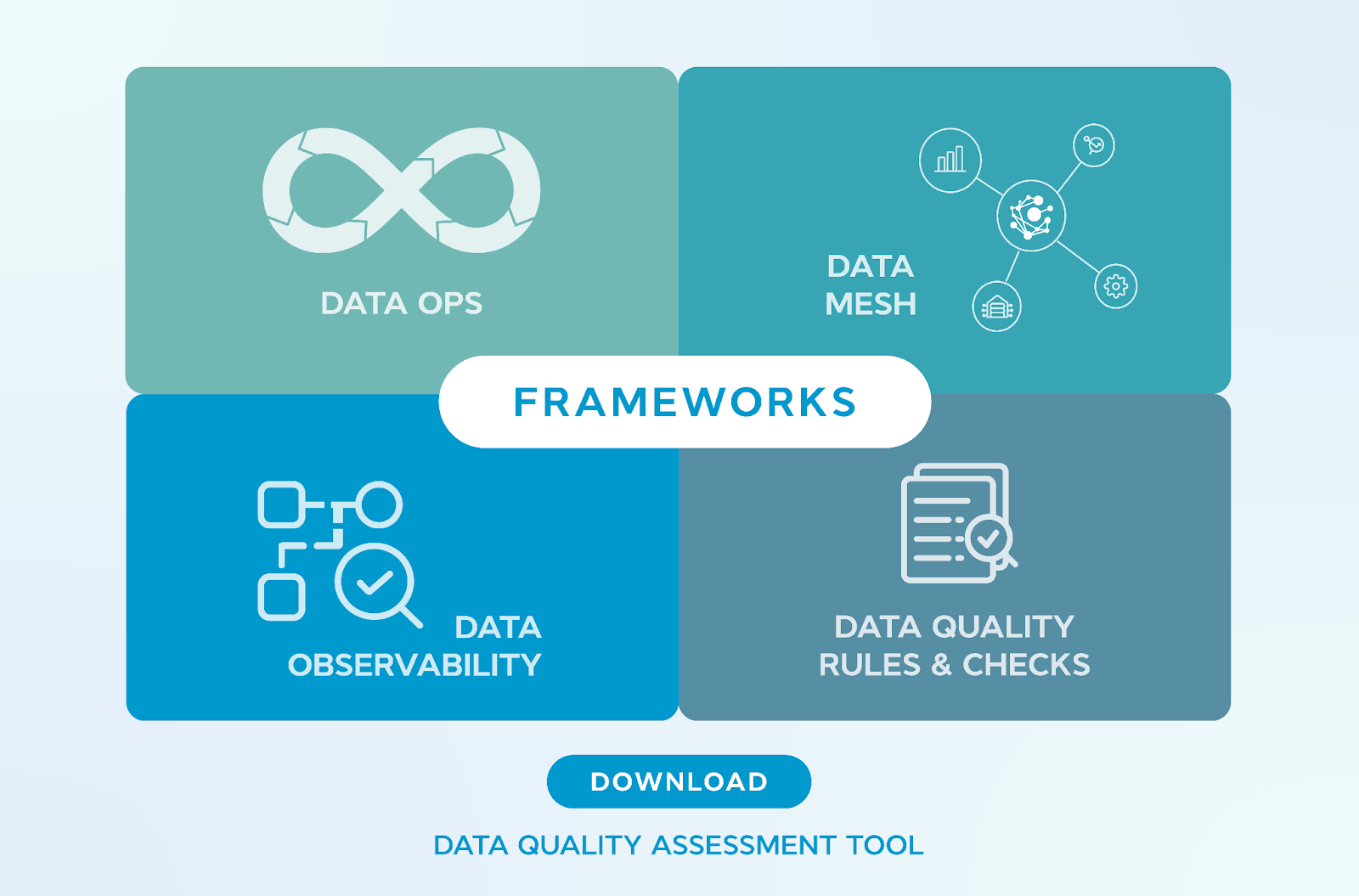

Click the image above to download the Data Quality Assessment Tool.

Scope of Data Quality Management

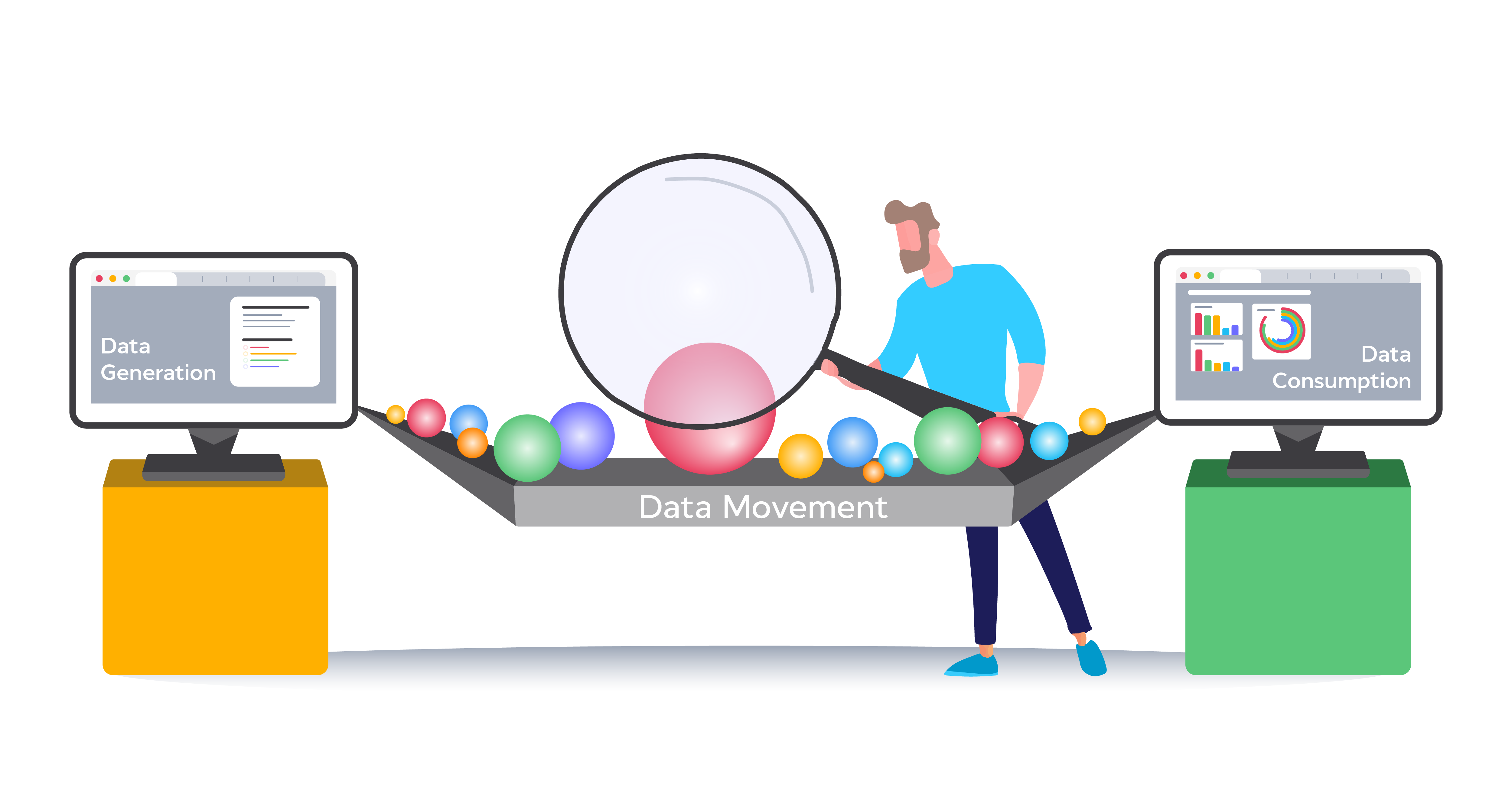

Before we talk about improving data quality, let's first understand the entire scope of data and where data quality goes bad.

There are three phases of data quality to consider: data generation, data movement, and data consumption. Companies will likely identify various dimensions within these phases that align with their data quality pain points.

Data Generation

Data is generated through various means, including business applications such as ERP, CRM, and marketing systems, as well as through machines such as sensors, computers, and servers.

The criticality and business value of the data vary, with data from applications like ERP and CRM being considered the most critical. In contrast, sensor data may be regarded as less critical depending on the specific business needs.

One of the main objectives of a data quality program is to ensure that data is high-quality when it is first generated to support effective decision-making and business operations.

Data Movement

Data movement refers to transferring data from one application to another for various business reasons, such as completing business processes or supporting decision-making. This movement often occurs when decision-making processes involve data from multiple applications. Data can be moved from one application to another using three prevalent methods:

- Integration: Data is moved through APIs or during transactions.

- Data Pipelines: Data is moved in bulk from one system to another.

- Business Processes: Data is moved manually as part of a business process, with the process itself creating incorrect or duplicate records.

It's important to note that data quality should be considered throughout the data movement process to ensure that data remains accurate, consistent, and reliable as it is moved from one system to another.

Data Consumption

Data is the lifeblood of modern business operations, driving everything from targeted marketing campaigns to forecasting future trends. As data is consumed and analyzed, it's essential to remember that not all data is created equal.

Data cleaning, augmentation, and integration can all play a role in determining the accuracy and value of the information being consumed. Failure to consider these factors can lead to poor decision-making, missed opportunities, and wasted resources. To harness the power of data, it is crucial not only to consume it but also to understand its entire lifecycle.

Why does data quality suffer?

Now that we know where data quality goes bad, let's understand why.

As we understand from the scope, data quality can suffer for different reasons in different phases. That's why those reasons need to be understood in their context.

Application data capture does not have proper system controls and business controls

In most cases, when an end user selects or fills in a value on a form, not every field goes through system and business controls. Let's take an example. If we ask for an email address, the value entered could be invalid. So, we use a system control to check the format of the email address and a business control to validate it by sending an email. Together, these controls ensure that the email address is accurate.

However, it is not always easy to establish a business process to validate every field, such as an address or phone number. Some processes, like a passport application, require supporting documents to confirm the information. Validating an address in an ordinary business setting is harder, since you might never get a response.
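The two kinds of control in the email example can be sketched in Python as follows. This is only an illustration: the regular expression is deliberately simple and does not cover every valid address, and `EmailCapture`, `capture_email`, and `confirm` are hypothetical names. The business control is modeled as a flag that would be set once the user responds to a verification email.

```python
import re
from dataclasses import dataclass

# Deliberately simple format check: something@something.tld, no whitespace.
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def is_well_formed(address: str) -> bool:
    """System control: does the value look like an email address?"""
    return EMAIL_PATTERN.fullmatch(address.strip()) is not None


@dataclass
class EmailCapture:
    address: str
    format_ok: bool = False  # result of the system control
    confirmed: bool = False  # result of the business control


def capture_email(address: str) -> EmailCapture:
    """Run the system control at the moment the value is captured."""
    return EmailCapture(address=address, format_ok=is_well_formed(address))


def confirm(capture: EmailCapture) -> None:
    """Business control: call this when the user answers the verification email."""
    if capture.format_ok:
        capture.confirmed = True


good = capture_email("ana@example.com")
bad = capture_email("ana@example")
confirm(good)
confirm(bad)  # has no effect, because the format check failed
```

Only a value that passes both controls would be treated as fully validated.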

Machines do not capture all the relevant data

There are hundreds of applications, sensors, servers, and other machines that generate data. Often, they lack consistent definitions and customizable controls. These sensors and applications mainly capture and generate the data that their designers thought was worth capturing.

Integrations are designed to ensure data availability, while data quality is an afterthought

We design integrations to transfer data from one system to another. If this process fails, you are notified by error messages and can fix the issue. Data quality, however, is not taken into account when designing integrations. Poor-quality data is easily transferred from one system to another, compounding data quality issues.

Pipelines do not have proper system controls and business controls

Sometimes, pipelines break as data is dynamic and systems are not designed consistently. This is one of the primary reasons for data quality problems.

For example, a data warehouse is designed to carry a field of length 100, while the source system is designed to capture 120. When you tested with the data available at the time, the pipeline didn't break because no field value was longer than 100. Later, a new transaction is created with a field value longer than 100. The pipeline then breaks, producing incorrect results and destroying the quality of the output.
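One possible system control for the field-length example is to check values against the target width before loading and to set aside anything that will not fit, rather than letting the load fail or truncate silently. The sketch below assumes a limit of 100 characters, taken from the example above; the function name and sample values are hypothetical.

```python
# Width of the target warehouse column in the example above.
TARGET_MAX_LENGTH = 100


def split_by_length(
    values: list[str], max_length: int = TARGET_MAX_LENGTH
) -> tuple[list[str], list[str]]:
    """Separate values that fit the target column from those that do not."""
    fits: list[str] = []
    too_long: list[str] = []
    for value in values:
        if len(value) <= max_length:
            fits.append(value)
        else:
            too_long.append(value)
    return fits, too_long


# Hypothetical batch: the last value uses the full 120 characters
# that the source system allows.
incoming = ["short note", "x" * 100, "y" * 120]
loadable, quarantined = split_by_length(incoming)
```

The quarantined values can then be reported to the owning team instead of breaking the pipeline.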

Business processes are not designed from a data perspective

Sometimes, duplicate business processes emerge for efficiency but do not consider the data perspective. This creates lots of duplicate data in multiple systems.

For example, marketing and sales teams manage one set of customer records, while invoicing and financial processes maintain duplicate records.
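To make the duplication concrete, the sketch below compares customer records held by two systems and lists the customers that appear in both. Matching on a normalized email address is an assumption chosen for simplicity, and the `crm` and `billing` records are hypothetical; real matching usually needs more than one attribute.

```python
def normalize(email: str) -> str:
    """Normalize an email so trivial differences do not hide a match."""
    return email.strip().lower()


def find_shared_customers(
    system_a: list[dict[str, str]], system_b: list[dict[str, str]]
) -> list[str]:
    """Return the normalized emails that exist in both systems."""
    keys_a = {normalize(record["email"]) for record in system_a}
    keys_b = {normalize(record["email"]) for record in system_b}
    return sorted(keys_a & keys_b)


# Hypothetical records maintained separately by two teams.
crm = [
    {"name": "Ana Diaz", "email": "Ana@Example.com"},
    {"name": "Li Wei", "email": "li@example.com"},
]
billing = [
    {"name": "A. Diaz", "email": "ana@example.com "},
    {"name": "Sam Roy", "email": "sam@example.com"},
]

shared = find_shared_customers(crm, billing)
```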

Data quality suffers for many more reasons, but the above are the most common.

Different frameworks to manage data quality

Data quality is a multifaceted problem that requires a comprehensive framework to address the various challenges that arise from multiple departments, tools, and processes. Simply creating a data quality or governance group alone would not solve the entire problem. The root cause of bad data quality can be attributed to various factors such as lack of standardization, poor communication, and inefficient processes.

DataOps

DataOps is an approach that has been gaining popularity in recent years as a solution to the complex problem of managing data. It is a framework that adapts the principles of DevOps, a methodology used in software development to improve collaboration and communication between teams and departments.

In the context of data management, DataOps aims to create a streamlined and efficient process for managing data, from the moment it is collected and stored all the way to analyzing and reporting on it.

Data Observability

Another approach that can be used to improve data quality is Data Observability, which involves collecting and analyzing data on the performance of data systems, such as data pipelines, to identify and resolve issues. This approach can help organizations better understand how their data systems are working, and identify areas that need improvement.
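Two signals commonly monitored on a pipeline are freshness (when the data was last loaded) and volume (how many rows arrived). The following sketch shows one simple way to check both. The 24-hour threshold, the 50% tolerance, and the function names are assumptions for illustration, not values prescribed by any observability tool.

```python
from datetime import datetime, timedelta
from statistics import mean


def is_stale(
    last_loaded_at: datetime,
    now: datetime,
    max_age: timedelta = timedelta(hours=24),
) -> bool:
    """Freshness check: has the data gone too long without a load?"""
    return now - last_loaded_at > max_age


def volume_anomaly(history: list[int], latest: int, tolerance: float = 0.5) -> bool:
    """Volume check: does the latest row count deviate from the
    historical mean by more than `tolerance` (as a fraction)?"""
    baseline = mean(history)
    return abs(latest - baseline) > tolerance * baseline


# Hypothetical run metadata for one pipeline.
row_counts = [1000, 1100, 900]
stale = is_stale(datetime(2024, 1, 1, 0, 0), now=datetime(2024, 1, 2, 6, 0))
suspicious_drop = volume_anomaly(row_counts, latest=300)
normal_run = volume_anomaly(row_counts, latest=950)
```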

Data Mesh

Data Mesh is another approach that is gaining traction. It is a way of designing and building data-driven systems that are modular, scalable, and resilient. Data Mesh aims to enable teams to work independently and autonomously while also ensuring data consistency and quality.

Data Quality Rules / Checks

Finally, Data Quality Checks can be used to ensure that data is accurate, complete, and consistent. This can include checks for missing data, duplicate data, and data in the wrong format. Automated checks can identify and flag any issues, which can then be reviewed and resolved by the relevant teams.
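The three kinds of check mentioned above (missing data, duplicate data, and data in the wrong format) can be sketched as a small automated rule set. The field names, the `YYYY-MM-DD` date format, and the sample `orders` are assumptions made for this example.

```python
import re
from collections import Counter

# Assumed expected format for order dates: YYYY-MM-DD.
DATE_FORMAT = re.compile(r"\d{4}-\d{2}-\d{2}")


def run_checks(rows: list[dict[str, str]]) -> list[tuple[int, str]]:
    """Return (row index, problem) pairs for every failed check."""
    issues: list[tuple[int, str]] = []
    id_counts = Counter(row.get("order_id") for row in rows)
    for index, row in enumerate(rows):
        # Missing data: the customer field is absent or empty.
        if not row.get("customer"):
            issues.append((index, "missing customer"))
        # Duplicate data: the same order_id appears more than once.
        if id_counts[row.get("order_id")] > 1:
            issues.append((index, "duplicate order_id"))
        # Wrong format: the date does not match the expected pattern.
        if not DATE_FORMAT.fullmatch(str(row.get("order_date", ""))):
            issues.append((index, "order_date not in YYYY-MM-DD format"))
    return issues


# Hypothetical sample data.
orders = [
    {"order_id": "A1", "customer": "Ana", "order_date": "2024-01-15"},
    {"order_id": "A2", "customer": "", "order_date": "2024-01-16"},
    {"order_id": "A1", "customer": "Li", "order_date": "16/01/2024"},
]

issues = run_checks(orders)
```

Each flagged pair can then be routed to the team that owns the data for review.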

In conclusion, no single framework solves the problem of bad data quality, but various approaches, tools, and frameworks are evolving to address this multifaceted problem. It is essential for organizations to take a holistic approach and adopt a combination of different strategies, such as DataOps, Data Observability, Data Mesh, and Data Quality Checks, to improve the quality of their data.

Why is data governance a must?

Despite the differences in focus, one common thing among all the frameworks mentioned above is the need for a unified governance structure. This structure ensures data is used and managed consistently and competently across all teams and departments. A federated governance or unified governance approach can help ensure data quality, security, and compliance while allowing teams to work independently and autonomously.

As we mentioned at the start, data quality is one of the core outcomes of a data governance initiative. As a result, a key concern for data governance teams, groups, and departments is improving the overall data quality. But there is a problem: coordination.

The data governance and quality team may not have the magic wand to fix all problems. Still, they are the wizards behind the curtain, guiding various teams to work together, prioritize, and conduct a thorough root cause analysis. A common-sense framework they use is the Data Quality Improvement Lifecycle (DQIL).

To ensure the DQIL's success, a tool must be in place that includes all teams in the process and lets them measure their efforts and communicate them back to management. Generally, this platform is combined with a data catalog, workflows, and collaboration features to bring everyone together so that data quality efforts can be measured.

Data quality improvement lifecycle

The Data Quality Improvement Lifecycle (DQIL) is a framework of predefined steps that detect and prioritize data quality issues, enhance data quality using specific processes, and route errors back to users and leadership so the same problems don’t arise again in the future.

The steps of the data quality improvement lifecycle are:

- Identify: Define and collect data quality issues, where they occur, and their business value.

- Prioritize: Prioritize data quality issues by their value and business impact.

- Analyze for Root Cause: Perform a root cause analysis on the data quality issue.

- Improve: Fix the data quality issue through ETL fixes, process changes, master data management, or manually.

- Control: Use data quality rules to prevent future issues.

Overall data quality improves as issues are continuously identified and controls are created and implemented.
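As an illustration of the Identify and Prioritize steps, the sketch below records issues with an impact score and ranks them. The `Issue` fields, the 1 to 5 impact scale, and the ranking rule (business impact first, then number of records affected) are assumptions for this example and are not prescribed by the DQIL.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    title: str
    business_impact: int      # assumed scale: 1 (low) to 5 (high)
    records_affected: int
    root_cause: str = ""      # filled in during root cause analysis
    status: str = "identified"


def prioritize(issues: list[Issue]) -> list[Issue]:
    """Rank issues by business impact, then by records affected."""
    return sorted(
        issues,
        key=lambda issue: (issue.business_impact, issue.records_affected),
        reverse=True,
    )


# Hypothetical backlog collected in the Identify step.
backlog = [
    Issue("Duplicate customer records", business_impact=4, records_affected=1200),
    Issue("Missing sensor timestamps", business_impact=2, records_affected=50000),
    Issue("Invalid billing addresses", business_impact=5, records_affected=300),
]

ranked = prioritize(backlog)
```

In this sketch, a high-impact issue outranks a larger but less critical one, which mirrors the point that business value, not volume alone, should drive the order of work.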

Learn more about Best Practices for Improving Data Quality with the Data Quality Improvement Lifecycle

Wrap up

So, you now know where and why bad-quality data can occur, which emerging frameworks exist to manage data quality, and how the data quality improvement lifecycle helps manage data quality problems. One more important thing is to socialize the data quality work inside the company so that everyone knows how data quality is being improved.

At OvalEdge, we can do this by housing a data catalog and managing data quality in our unified platform. OvalEdge has many features to tackle data quality challenges and implement best practices. The data quality improvement lifecycle can be wholly managed through OvalEdge, capturing the context of why a data quality issue occurred. Data quality rules monitor data objects and notify the correct person if a problem arises. You can communicate to your users which assets have active data quality issues while the team works to resolve them.

Track your organization's data quality based on the number of issues found and their business impact. This information can be presented in a report to track monthly improvements to your data's quality. With OvalEdge, we can help you achieve data quality at the source, following best practice. If any downstream challenges exist, our technology can help you address and overcome them.
