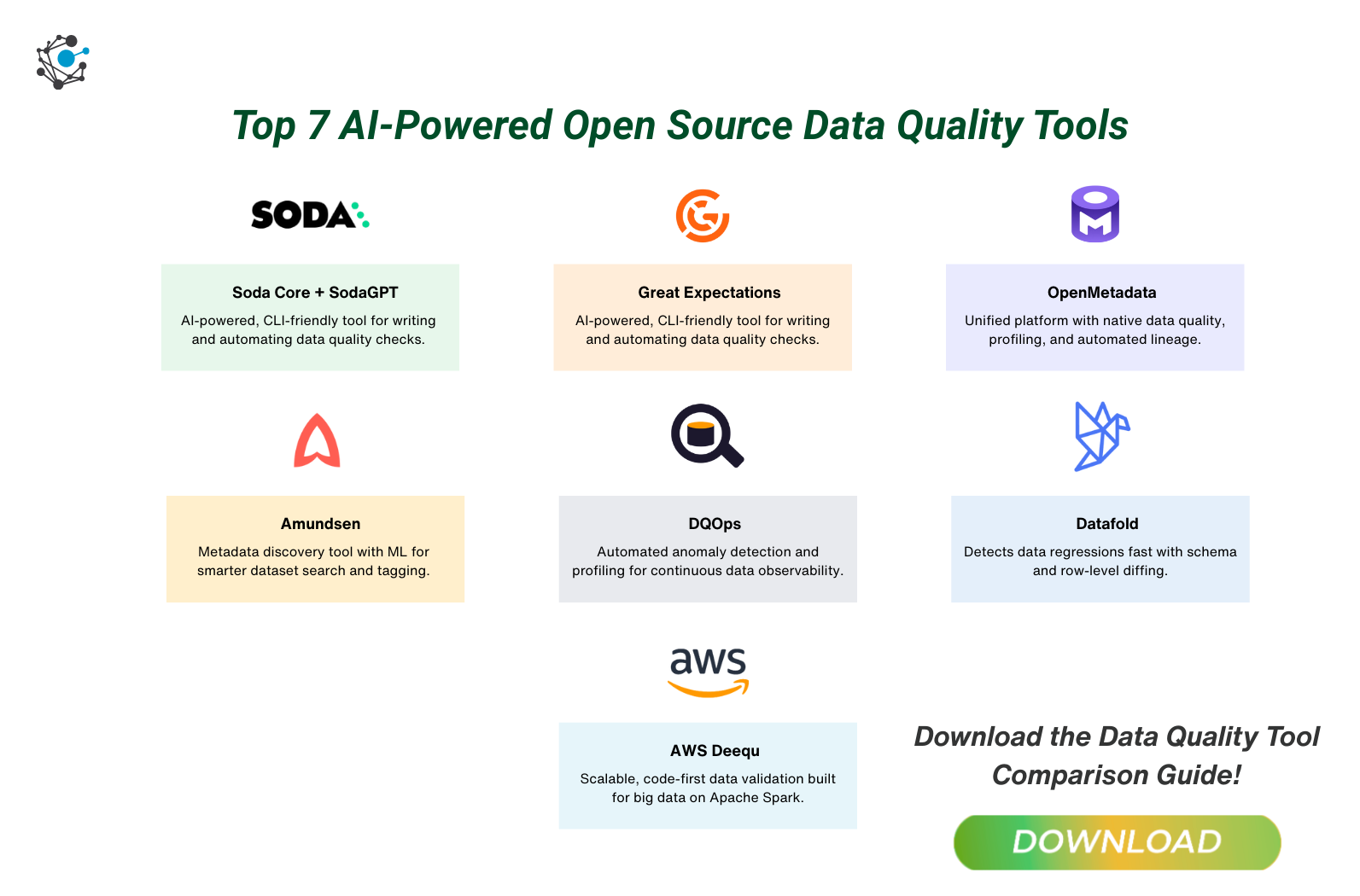

Top 7 AI-Powered Open-Source Data Quality Tools in 2025

Open source data quality tools are evolving fast, and AI is accelerating that evolution. By combining the flexibility of open frameworks with AI-powered features like anomaly detection and automated rule creation, these tools offer a low-barrier entry point for organizations aiming to improve data reliability. But this doesn’t always translate to enterprise readiness. Most tools focus on isolated capabilities such as profiling, validation, or monitoring, while lacking critical support for governance, metadata management, and business alignment. As adoption rises, so does the need to distinguish between lightweight experimentation and platforms that can support scale, complexity, and regulatory compliance. This guide evaluates seven of the most notable AI-powered open source data quality tools, exploring what they do well, where they fall short, and what teams need to consider before adopting them in production.

As data becomes the backbone of business operations, its quality has a direct impact on decision-making, compliance, and competitiveness. Still, many organizations rely on fragmented rules or isolated validations to manage data trust—often without visibility, automation, or accountability.

A McKinsey study found that 72% of B2B companies struggle with effective data management, citing issues like poor visibility, inconsistent definitions, and decentralized ownership. Compounding the issue, over 80% of enterprises continue to make decisions based on stale or outdated data, according to a survey highlighted by Business Wire.

AI-powered data quality tools aim to bridge this gap. With features like anomaly detection, rule recommendation, and profiling automation, they represent a shift from static rule sets to dynamic intelligence. And thanks to open-source innovation, many of these tools are now available without enterprise software costs.

But not all open-source tools are built for scale. This guide helps you distinguish early-stage experimentation from production-ready capability—and determine when open source is enough, and when it's time for more.

AI in data quality: What "AI-powered" really means

“AI-powered” is often used broadly—but in data quality, it means more than automation. It refers to intelligent systems that can adapt to data trends, detect issues without predefined thresholds, recommend validation rules, and enrich metadata for greater traceability. Some tools even offer natural language interfaces to help non-technical users define checks with ease.

Core AI capabilities that define a truly intelligent data quality tool include:

- Anomaly Detection: Identifies outliers and shifts based on historical trends, not static thresholds.
- Automated Data Profiling: Uses ML to analyze data structure, patterns, and completeness without manual scripts.
- Natural Language Rule Creation: Converts human-readable test instructions into executable checks.
- Self-Learning Validation: Continuously improves rule recommendations based on how your data evolves.
- Metadata Enrichment: Automatically enhances context around data, supporting better discovery and governance.

Many open-source tools offer some, but not all, of these features. The gaps become most apparent in production-scale usage.
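To make the difference between a static threshold and history-based anomaly detection concrete, here is a minimal sketch. It assumes a simple z-score over recent daily row counts; the function name, the threshold of 3 standard deviations, and the sample numbers are illustrative assumptions, not taken from any of the tools covered in this guide, which may use more sophisticated models.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, z_threshold=3.0):
    """Flag `latest` when it sits more than `z_threshold` standard
    deviations away from the mean of `history` (illustrative rule)."""
    if len(history) < 2:
        return False  # not enough history to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # History is perfectly flat: any change at all is a deviation.
        return latest != mu
    return abs(latest - mu) / sigma > z_threshold


# Hypothetical daily row counts for one table over the past week.
daily_row_counts = [10_120, 9_980, 10_045, 10_210, 9_890, 10_075, 10_150]

print(is_anomalous(daily_row_counts, 10_100))  # a typical day
print(is_anomalous(daily_row_counts, 4_300))   # a sudden drop in volume
```

The point of the sketch is that the acceptable range is derived from the data's own history, so it moves as the data evolves instead of being hard-coded.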

Top 7 AI-powered open-source data quality tools

The surge in open source data quality tools has empowered engineering and data teams to enforce data quality without the overhead of commercial platforms. With the addition of AI-driven features such as anomaly detection, profiling, and natural language check creation, these tools are increasingly capable—but their depth and enterprise readiness vary. Below are seven of the most promising AI-enhanced open-source solutions available today.

1. Soda Core + SodaGPT

Soda Core is a command-line tool designed to help engineers write tests and monitor the health of data pipelines. With the addition of SodaGPT, users can now generate data quality checks using natural language, powered by large language models.

Key Features:

- No-code check generation via AI (SodaGPT)
- CLI and YAML-based implementation
- Integration with dbt, CI/CD workflows, and Airflow

Biggest Limitations:

- Lacks built-in metadata management or data lineage tracking
- Governance and role-based access controls are minimal in the open-source tier

2. Great Expectations (GX)

Great Expectations (GX) is one of the most mature open-source data quality tools. It allows teams to create human-readable tests—called “expectations”—that validate data integrity at scale. The tool has introduced AI-assisted expectation generation, helping automate test creation.

Key Features:

- A library of 300+ expectations and customizable tests
- Strong community, documentation, and flexible integrations
- AI-generated test suggestions to accelerate onboarding

Biggest Limitations:

- No native support for real-time or streaming data validation
- Governance, anomaly detection, and observability require third-party integrations

3. OpenMetadata

OpenMetadata is a modern metadata platform combining discovery, lineage, data quality, and governance. It incorporates machine learning to automate rule suggestions, profiling, and anomaly detection, making it one of the most feature-rich open tools available.

Key Features:

- AI-powered profiling and column-level quality checks
- Automated lineage generation and metadata enrichment
- Native support for tests like null checks, freshness, and value distributions

Biggest Limitations:

- Deployment can be complex and resource-intensive
- Still maturing in community adoption and production stability

4. Amundsen (with ML Extensions)

Developed by Lyft, Amundsen is primarily a metadata discovery tool with some ML capabilities added via extensions—like ranking datasets based on usage relevance. While not a full-fledged data quality solution, it’s often used as a complementary layer for discovery.

Key Features:

- ML-enhanced search and auto-tagging of datasets
- Lightweight and simple to deploy in smaller environments
- Community-driven roadmap and good search UX

Biggest Limitations:

- No built-in data quality monitoring or test framework
- Governance and compliance features are minimal or absent

5. DQOps

DQOps is an open-source data observability tool that supports continuous monitoring and anomaly detection using machine learning. It focuses on automating checks for data volume, freshness, completeness, and schema consistency.

Key Features:

- ML-based anomaly detection for scheduled scans
- Integration with JDBC-compatible data sources
- Profiles datasets for distributions, nulls, and uniqueness

Biggest Limitations:

- Basic UI and developer experience compared to alternatives
- A smaller ecosystem and newer community mean slower support cycles
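As a rough illustration of what column profiling for distributions, nulls, and uniqueness involves, the sketch below computes a few basic metrics over an in-memory column. It is plain Python and does not use the DQOps API; the function name, the metric names, and the sample data are all assumptions made for this example.

```python
from collections import Counter


def profile_column(values):
    """Return basic profile metrics for one column.

    `values` is a list where None represents a missing value.
    """
    total = len(values)
    non_null = [v for v in values if v is not None]
    counts = Counter(non_null)
    return {
        "row_count": total,
        "null_ratio": (total - len(non_null)) / total if total else 0.0,
        "distinct_count": len(counts),
        "is_unique": all(c == 1 for c in counts.values()),
        # Most frequent values give a quick view of the distribution.
        "top_values": counts.most_common(3),
    }


# Hypothetical "country" column with two missing entries.
country = ["DE", "FR", "DE", None, "US", "DE", None, "FR"]
profile = profile_column(country)
print(profile)
```

A real profiler would push these aggregations down to the data source as SQL rather than pulling rows into memory, but the metrics it reports are of this kind.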

6. Datafold (Open-Source Diff Tool)

Datafold is best known for its commercial data diff and observability features. Its open-source version allows data engineers to compare datasets across environments—useful during schema migrations or code changes.

Key Features:

- Schema and row-level diffs to prevent pipeline breakage
- Integrations with dbt, GitHub Actions, and CI/CD pipelines
- Efficient detection of data regressions during development

Biggest Limitations:

- Most AI-driven features like column-level lineage and diff impact analysis are commercial-only
- No profiling, anomaly detection, or governance capabilities in the open version
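The idea behind schema and row-level diffs can be sketched in a few lines. The example below compares two small in-memory datasets by primary key; it is not Datafold's implementation or API, and the function names, the `id` key, and the sample rows are assumptions for illustration only.

```python
def diff_schema(old_columns, new_columns):
    """Report columns added to or removed from a table."""
    return {
        "added": sorted(set(new_columns) - set(old_columns)),
        "removed": sorted(set(old_columns) - set(new_columns)),
    }


def diff_rows(old_rows, new_rows, key):
    """Compare two lists of dict rows by primary key `key`."""
    old_by_key = {row[key]: row for row in old_rows}
    new_by_key = {row[key]: row for row in new_rows}
    shared = old_by_key.keys() & new_by_key.keys()
    return {
        "added": sorted(new_by_key.keys() - old_by_key.keys()),
        "removed": sorted(old_by_key.keys() - new_by_key.keys()),
        "changed": sorted(k for k in shared if old_by_key[k] != new_by_key[k]),
    }


# Hypothetical production table versus the output of a development branch.
prod = [{"id": 1, "amount": 100}, {"id": 2, "amount": 250}, {"id": 3, "amount": 75}]
dev = [{"id": 1, "amount": 100}, {"id": 2, "amount": 255}, {"id": 4, "amount": 30}]

print(diff_schema(["id", "amount"], ["id", "amount", "currency"]))
print(diff_rows(prod, dev, key="id"))
```

Running a comparison like this in CI before merging a change is what surfaces regressions early. At warehouse scale a diff tool cannot load full tables into memory as this sketch does, so treat it as a statement of the idea rather than a usable implementation.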

7. Deequ

Created by AWS, Deequ is a library built on Apache Spark that lets users define unit tests for data. While it doesn't include AI-driven features, it remains popular for its scalability and flexibility in big data environments.

Key Features:

- Metric-based validation for completeness, uniqueness, and custom constraints
- Strong scalability for batch workloads on Spark
- Fully programmable for advanced rule logic

Biggest Limitations:

- No built-in user interface or workflow orchestration
- Lacks AI or adaptive learning capabilities
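The "unit tests for data" pattern, where metrics are computed first and constraints are then asserted on them, can be sketched without Spark. The code below is plain Python and deliberately does not use Deequ's Scala API or PyDeequ; the function names, the definition of uniqueness used here (share of non-null values that occur exactly once), and the sample rows are assumptions for this example.

```python
from collections import Counter


def completeness(rows, column):
    """Fraction of rows where `column` is present and not None."""
    if not rows:
        return 1.0
    return sum(1 for r in rows if r.get(column) is not None) / len(rows)


def uniqueness(rows, column):
    """Fraction of non-null values that occur exactly once."""
    values = [r[column] for r in rows if r.get(column) is not None]
    if not values:
        return 1.0
    counts = Counter(values)
    return sum(1 for v in values if counts[v] == 1) / len(values)


def run_checks(rows, constraints):
    """Evaluate (name, metric_fn, column, predicate) constraints."""
    report = {}
    for name, metric_fn, column, predicate in constraints:
        value = metric_fn(rows, column)
        report[name] = {"value": value, "passed": predicate(value)}
    return report


# Hypothetical orders data with a duplicate key and a missing email.
orders = [
    {"order_id": 1, "email": "a@example.com"},
    {"order_id": 2, "email": None},
    {"order_id": 3, "email": "c@example.com"},
    {"order_id": 3, "email": "d@example.com"},
]

constraints = [
    ("order_id is complete", completeness, "order_id", lambda v: v == 1.0),
    ("order_id is unique", uniqueness, "order_id", lambda v: v == 1.0),
    ("email is at least 90% complete", completeness, "email", lambda v: v >= 0.9),
]

report = run_checks(orders, constraints)
for name, outcome in report.items():
    print(name, outcome)
```

Separating metric computation from the predicate is what makes the rules fully programmable: any function of a metric can serve as a constraint.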

Comparing open-source data quality tools

While open-source data quality tools offer a solid foundation, their capabilities vary significantly. Some excel in AI-powered anomaly detection and metadata management, while others prioritize AI-powered automation or ease of deployment.

The table below provides a side-by-side comparison of the most critical features across leading AI-powered open-source data quality tools.

[Image: Comparing top seven open source data quality tools (Soda Core, Great Expectations, OpenMetadata, Amundsen, DQOps, Datafold, Deequ)]

Key challenges in open-source data quality tools

While open-source data quality tools offer a strong foundation, our analysis reveals several challenges that organizations must navigate:

Functional gaps across the data quality lifecycle

Most tools address isolated aspects of data quality—such as validation, anomaly detection, or profiling—but rarely deliver comprehensive coverage. Core elements like automated lineage tracking, metadata enrichment, and policy-based governance are often absent or underdeveloped. This creates blind spots and requires teams to patch together multiple components for even basic coverage.

Governance and policy enforcement are largely missing

Open-source tools consistently lack embedded governance capabilities. Role-based access, data classification, auditability, and compliance policy enforcement are either unsupported or require significant custom development. As a result, it's difficult to operationalize data quality in environments that are regulated, multi-tenant, or business-facing.

AI features are isolated, not systemic

While some AI capabilities are emerging—like anomaly detection or natural language–based check creation—they tend to exist in silos. These features don’t yet extend into rule evolution, adaptive monitoring, or intelligent remediation workflows. Without system-wide intelligence, teams remain reliant on manual tuning and static logic.

Metadata management and lineage are limited

Metadata management, a foundational layer for data quality, is weak or non-existent in most tools. Without automated metadata capture, context propagation, and cross-system lineage, organizations face difficulty tracing root causes, understanding data usage, or supporting impact analysis—all essential for trustworthy reporting and decision-making.
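To show why lineage matters for impact analysis, here is a minimal sketch that walks a lineage graph to list everything downstream of a dataset. The graph structure, the asset names, and the function name are hypothetical; tools that capture lineage automatically would populate such a graph from query logs or pipeline metadata instead of a hand-written dictionary.

```python
from collections import deque


def downstream_assets(lineage, start):
    """Breadth-first walk over lineage edges (source -> list of targets).

    Returns every asset reachable from `start`, nearest first.
    """
    seen = {start}
    queue = deque([start])
    impacted = []
    while queue:
        node = queue.popleft()
        for target in lineage.get(node, []):
            if target not in seen:
                seen.add(target)
                impacted.append(target)
                queue.append(target)
    return impacted


# Hypothetical lineage: a raw table feeds staging, marts, and a dashboard.
lineage = {
    "raw.orders": ["staging.orders"],
    "staging.orders": ["marts.revenue", "marts.customer_ltv"],
    "marts.revenue": ["dashboard.finance"],
}

impacted = downstream_assets(lineage, "raw.orders")
print(impacted)
```

Reversing the edges and running the same walk from a broken report gives the upstream candidates for root cause analysis. Without captured lineage, neither question can be answered automatically.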

Engineering integration is strong, but doesn’t scale to business needs

While many tools support CI/CD pipelines and developer workflows, they are not optimized for business users, compliance officers, or data stewards. There’s a lack of intuitive interfaces, documentation portals, or low-code configuration. This limits adoption beyond technical teams and reinforces silos between data producers and consumers.

Solving for scale: Why the right platform still matters

Open source data quality tools are a vital part of modern data ecosystems. They enable teams to validate data early, experiment with automation, and improve engineering agility. But as organizations scale—across data volume, user roles, business units, and regulatory requirements—the cracks begin to show.

What starts as a tactical solution for developers quickly becomes difficult to govern, extend, and operationalize across the enterprise. Teams struggle to move from detection to resolution without lineage, metadata context, or embedded policy controls. Meanwhile, the burden of integration grows—requiring engineering resources to stitch together anomaly detection, profiling, governance, and monitoring across disconnected tools.

Scaling data quality isn’t just about adding more rules or checks. It’s about ensuring those checks are traceable, governed, automated, and aligned with business needs. That shift requires more than toolkits—it requires a platform.

How OvalEdge bridges the gap

OvalEdge is designed to solve the exact challenges that open-source tools struggle with as organizations scale. Instead of piecing together point solutions, OvalEdge brings AI-driven intelligence, governance, and usability into one integrated platform.

- End-to-end lineage and metadata context: Automatically map data lineage across systems, enriching it with business and technical metadata to enable root cause analysis and impact tracking. Learn more about our automated data lineage tool.

- AI-powered data quality framework: Detect anomalies, generate rules, and prioritize data quality issues using machine learning, not just scripts.

- Policy-driven governance: Enforce data access, masking, and validation policies through RBAC and ABAC frameworks. Maintain audit trails and comply with regulations like GDPR and HIPAA by design.

- Integrated with your ecosystem: Connect to 150+ systems across cloud, on-prem, and SaaS environments—minimizing custom development and maximizing interoperability.

- Built for both technical and business users: Offer role-based interfaces, low-code configuration, and intuitive workflows to ensure adoption across engineering, analytics, and compliance teams.

By consolidating data quality, discovery, governance, and metadata into one platform, OvalEdge enables organizations to trust their data—at scale, with confidence.

Learn more about OvalEdge's unified data quality platform

Final thoughts

AI-powered open source data quality tools have helped democratize data validation, making it faster and more accessible for engineering teams. But as organizations scale, these tools often fall short in addressing governance, metadata lineage, and policy enforcement.

The solution isn't to abandon open source; it's to know when to extend beyond it. Open tools can form a strong foundation, but platforms like OvalEdge are built to scale that foundation across your enterprise.

By combining intelligent automation, governance, lineage, and policy controls in one environment, OvalEdge helps ensure that data quality isn’t just an IT concern—it becomes a business enabler. Whether you're moving from experimentation to orchestration, or scaling from team-level to enterprise-wide trust, OvalEdge gives you the framework to move forward with confidence.